Natural Language Processing is a subfield of Artificial Intelligence that has existed for some time. In recent years there have been many developments, and nowadays human language can not only be analyzed but also generated by AI models. There are several so-called language models able to generate human-like text. Probably the most […]

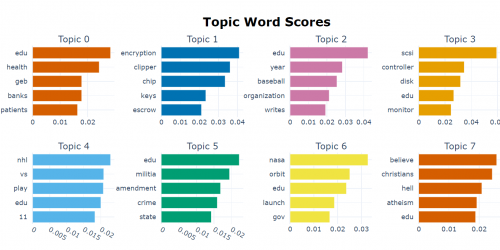

If you would like to read more about Topic Modeling, please have a look at our article ‘An in-depth Introduction to Topic Modeling using LDA and BERTopic’, and make sure to check out the notebook based on a deep learning approach, BERTopic. If you are interested in NLP tasks in general, then you are […]

If you would like to read more about Topic Modeling, please have a look at our article ‘An in-depth Introduction to Topic Modeling using LDA and BERTopic’. Moreover, make sure to check out the notebook based on a traditional approach, LDA. If you are interested in NLP tasks in general, then you are in […]

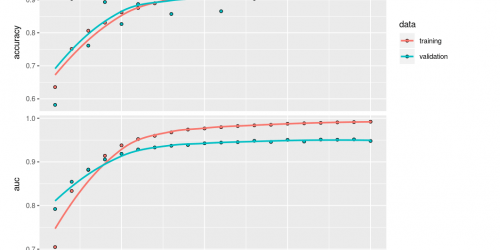

What did we learn from joining Kaggle’s CommonLit Readability Prize? Well, besides having a lot of fun, it definitely helped us to explore the text analytics landscape even further. You can read our whole story in this article.

For our latest Project Friday, we entered the CommonLit Readability competition on Kaggle. We learned a lot from working together on this cool NLP task. Stay tuned to see where this led us.

How to uncover the predictive potential of textual data using topic modeling, word embedding, transfer learning and transformer models with R

Textual data is everywhere: reviews, customer questions, log files, books, transcripts, news articles, files, interview reports … Yet texts are still used (too) little, alongside the available structured data, to answer analysis questions. […]

In a series of articles we compare different NLP techniques to show you how we get valuable information from unstructured text. About a year ago we gathered reviews of Dutch restaurants. We were wondering whether ‘the wisdom of the crowd’ (reviews from restaurant visitors) could be used to predict which restaurants are most likely to receive a new Michelin star. Read this post to see how that worked out. We used topic modeling as our primary tool to extract information from the review texts and combined that with predictive modeling techniques to arrive at our predictions.

Our predictions got a lot of attention, and also raised questions about how we did the text analysis part. To answer these questions, we explain our approach in more detail in a series of articles on NLP. We didn’t stop exploring NLP techniques after our publication, and we would also like to share insights from adding more novel NLP techniques. More specifically, we will use two types of word embeddings (a classic Word2Vec model and a GloVe embedding model), transfer learning with pretrained word embeddings, and transformers like BERT. We compare the added value of these advanced NLP techniques to our baseline topic model on the same dataset. By showing what we did and how we did it, we hope to guide others who are keen to use textual data for their own data science endeavours.
