Bridging the Gap: How AI Translates Sign Language into Speech

Exploring how AI-powered models transform sign language into natural-sounding spoken words

At Cmotions, we enjoy leveraging AI to solve business or societal challenges. One of the challenges we set out to address is communication accessibility for individuals who rely on sign language. What if AI could bridge the gap between sign language and spoken language, making interactions more seamless?

With that vision in mind, we set out to explore whether we could build something that interprets (WL)ASL sign language and converts it into English speech: in other words, sign-to-speech translation using AI. The complete process consists of several steps. First, we finetuned a pretrained model to translate videos of sign language into predicted ‘glosses’ (textual representations of signs); in another article we walk you through that finetuning process. In this notebook we use the finetuned model to interpret short video clips of sign language and convert them into written glosses. These glosses are then fed into a large language model (LLM), which transforms them into grammatically correct English sentences. Finally, the written output is converted into speech, making sign language accessible through audio. In short, below we show how the model we trained is used to (1) transform a hand sign video into glosses (the words or terms expressed), then (2) turn those glosses into a sentence everyone can understand and finally (3) convert that sentence into speech.

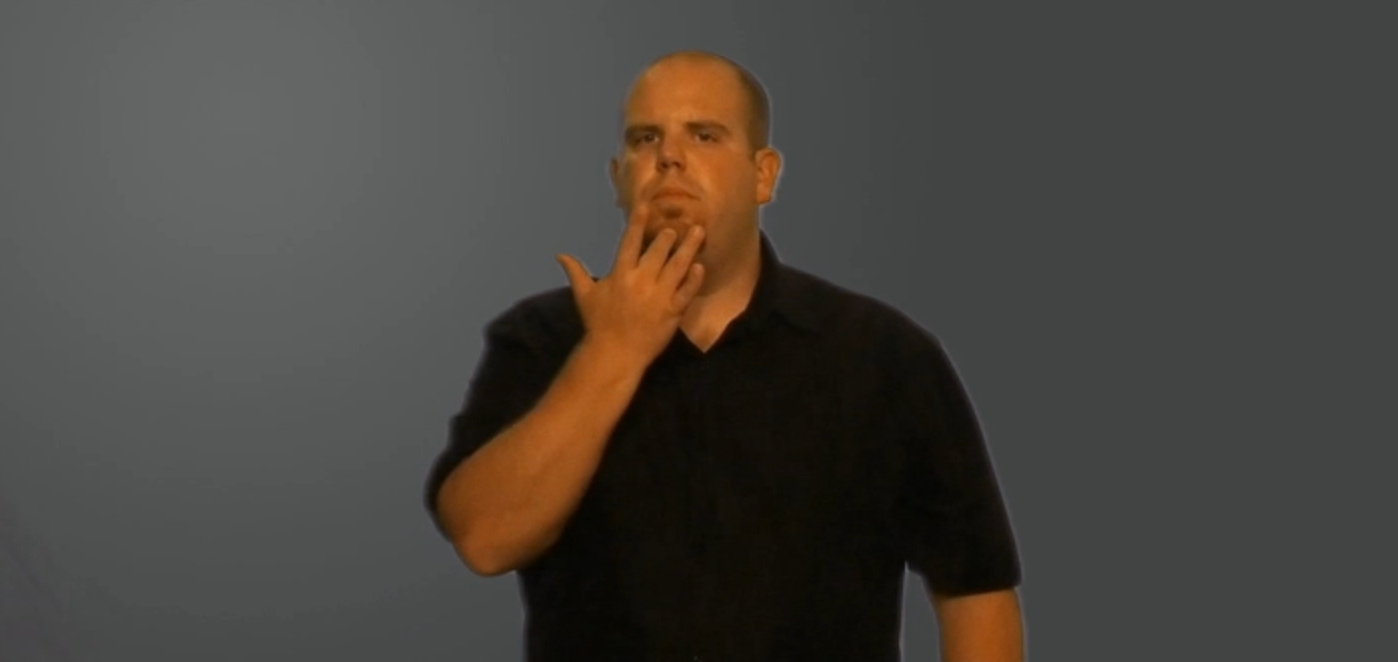
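The three steps can be thought of as a simple chain of functions. The sketch below only illustrates that idea: `sign_to_speech` and its three stage arguments are hypothetical names that do not appear in our notebook, and the stand-in lambdas take the place of the real video model, LLM and text-to-speech calls.

```python
from typing import Callable, List


def sign_to_speech(
    video_paths: List[str],
    classify_sign: Callable[[str], str],
    glosses_to_sentence: Callable[[List[str]], str],
    synthesize: Callable[[str], str],
) -> str:
    """Chain the three stages; every name here is hypothetical."""
    glosses = [classify_sign(path) for path in video_paths]  # (1) video -> gloss
    sentence = glosses_to_sentence(glosses)                  # (2) glosses -> sentence
    return synthesize(sentence)                              # (3) sentence -> audio


# Stand-in stages so the sketch runs without a model, an LLM or a TTS service.
fake_predictions = {"clip_1.mp4": "you", "clip_2.mp4": "live", "clip_3.mp4": "where"}
audio_file = sign_to_speech(
    ["clip_1.mp4", "clip_2.mp4", "clip_3.mp4"],
    classify_sign=lambda path: fake_predictions[path],
    glosses_to_sentence=lambda glosses: " | ".join(glosses),
    synthesize=lambda sentence: sentence.replace(" | ", "_") + ".mp3",
)
print(audio_file)
```

Keeping the stages as separate callables makes it easy to swap one of them (for example another LLM) without touching the rest.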

Follow along to see how a video of someone signing the sentence “Where do you prefer to go on vacation?” is converted into a clear, natural-sounding audio note.

Let’s start by displaying the video. As you can see, this video is a concatenation of separately recorded signs. This also reflects how we have currently trained our model: on individual signs. For each clip, our model predicts the most probable sign (or ‘gloss’ in sign language lingo).

Tools & Packages

What packages do we use? Here’s an overview:

- PyTorch (torch): an open-source machine learning library, the backbone for many deep learning use cases.

- Ollama: a lightweight, extensible framework for building and running language models efficiently.

- LangChain: a framework for developing applications powered by large language models (LLMs).

In the three steps (video to glosses, glosses to natural text sentence, and natural text sentence to audio) we use different tools and models:

- Transformers, opencv-python, torchvision and pytorchvideo: to apply our finetuned model to the new video snippets and predict the glosses.

- Ollama and LangChain: to transform the predicted glosses into a natural sentence.

- gTTS and IPython's Audio display: to create and play the natural sentence as an audio file.

You can learn more about these packages in the following resources:

- https://ipython.org/ipython-doc/3/api/generated/IPython.display.html

- https://pytorch.org/

- https://ollama.com/

- https://www.langchain.com/

- https://pypi.org/project/opencv-python/

- https://pytorch.org/vision/stable/index.html

- https://pypi.org/project/pytorchvideo/

- https://pypi.org/project/gTTS/

# make sure you have ollama installed and pulled the llm used: %sh ollama pull qwen2.5:32b-instruct
import torch
import os
import pytorchvideo
from transformers import VideoMAEImageProcessor, VideoMAEForVideoClassification
from pytorchvideo.transforms import ApplyTransformToKey, UniformTemporalSubsample
from torchvision.transforms import Compose, Lambda, Normalize, Resize
import pytorchvideo.transforms as transforms
import pytorchvideo.data
from pytorchvideo.data.encoded_video import EncodedVideo
import imageio
import numpy as np
from IPython.display import Image
import glob
import itertools

Code snippet 1: Import the packages we use

Data Collection

For this project we aim to develop an AI-driven tool capable of interpreting (WL)ASL sign language and converting it into English speech. The first step is interpreting short video clips of sign language and converting them into written glosses.

What is a gloss?

“When a word is associated with a sign it’s called a GLOSS: In simplest terms, a GLOSS is a label. In ASL it is an English word or words that we use to name ASL signs so that we can talk about these signs. The word or words associated with that sign do not represent the full meaning of the sign; at best they approximate its meaning. A GLOSS is a label with very weak adhesive, it’s not stuck on very securely. Some signs have several different possible glosses. For instance, the words ‘IMPORTANT’, ‘WORTH’ and ‘VALUE’ could all be used to label the same ASL sign. A gloss is a brief notation, especially a marginal or interlinear one, of the meaning of a word or wording in a text.” – Rick Mangan 2002

Training Data

To train a model that can translate a video fragment of a sign into the most probable gloss, we use the dataset introduced in “Word-level Deep Sign Language Recognition from Video: A New Large-scale Dataset and Methods Comparison”. This WLASL dataset is the largest video dataset for Word-Level American Sign Language (ASL) recognition and features 2,000 common words in ASL.

Connect to blob storage

To develop our solution, we created a subset of signs from this huge dataset. We split this subset (29 hand gestures) into training, validation and test sets to develop and evaluate our finetuned model.
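As a rough illustration of such a subset-and-split step, the sketch below filters a list of (video, gloss) pairs to a chosen set of glosses and then splits each gloss separately, so every sign appears in the training set. The helper `split_per_gloss`, the 80/10/10 fractions, the file names and the example glosses are all assumptions made for this example; they are not the split we actually used.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, str]  # (video path, gloss label)


def split_per_gloss(samples: List[Sample], train_frac: float = 0.8,
                    val_frac: float = 0.1, seed: int = 42) -> Dict[str, List[Sample]]:
    """Split the videos of each gloss separately into train/val/test."""
    by_gloss = defaultdict(list)
    for path, gloss in samples:
        by_gloss[gloss].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    splits: Dict[str, List[Sample]] = {"train": [], "val": [], "test": []}
    for gloss in sorted(by_gloss):
        paths = by_gloss[gloss]
        rng.shuffle(paths)
        n_train = round(len(paths) * train_frac)
        n_val = round(len(paths) * val_frac)
        splits["train"] += [(p, gloss) for p in paths[:n_train]]
        splits["val"] += [(p, gloss) for p in paths[n_train:n_train + n_val]]
        splits["test"] += [(p, gloss) for p in paths[n_train + n_val:]]
    return splits


# Hypothetical data: three glosses with ten videos each, of which we keep two glosses.
all_samples = [(f"{g}_{i}.mp4", g) for g in ("where", "you", "book") for i in range(10)]
wanted = {"where", "you"}
subset = [sample for sample in all_samples if sample[1] in wanted]
splits = split_per_gloss(subset)
print({name: len(items) for name, items in splits.items()})
```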

Load the finetuned model

The model was finetuned on the selected training data. In our other blog we show how we finetuned the model to predict glosses. Now we want to use it in practice: here we load our model and apply it to a sequence of sign videos. Since we started from a pretrained open-weights model, which we could download and finetune using the Transformers package, we can use the same package for inference (applying the model to our new series of sign videos).

from transformers import VideoMAEImageProcessor, VideoMAEForVideoClassification

model_ckpt = '/dbfs/mnt/handsigntospeech/Models/-sign_finetuned-2024-11-03T08/best_model/'
image_processor = VideoMAEImageProcessor.from_pretrained(model_ckpt)
model = VideoMAEForVideoClassification.from_pretrained(
    model_ckpt,
    ignore_mismatched_sizes=True,
)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)

Code snippet 2: Load the model we finetuned

Pre-processing

Before we can apply our model to the sequence of sign videos, we have to preprocess those videos. This preprocessing brings the videos into the same format as the training videos had when we finetuned the Transformer model. The following custom function preprocesses a video in terms of size and encoding, and then classifies it.

# Defaults for all videos
mean = image_processor.image_mean
std = image_processor.image_std
clip_duration = 1.71  # seconds read from the start of each video

# Define the inference function for a single video
def predict_video_class(video_path, model=model):
    # Set size for resize transform
    if "shortest_edge" in image_processor.size:
        height = width = image_processor.size["shortest_edge"]
    else:
        height = image_processor.size["height"]
        width = image_processor.size["width"]
    resize_to = (height, width)

    # Define video transformations
    val_transform = Compose([
        UniformTemporalSubsample(model.config.num_frames),
        Lambda(lambda x: x / 255.0),
        Resize(resize_to, antialias=True),
        Lambda(lambda x: x.permute(1, 0, 2, 3)),  # (C, T, H, W) -> (T, C, H, W)
        Normalize(mean, std)
    ])

    # Load and decode the video
    video = EncodedVideo.from_path(video_path)

    # Extract a clip from the video
    video_data = video.get_clip(start_sec=0, end_sec=clip_duration)

    # Apply transformations to the video
    video_frames = video_data['video']
    video_frames = val_transform(video_frames)

    # Add batch dimension (batch_size=1) before passing to the model
    video_frames = video_frames.unsqueeze(0)  # Shape: [1, T, C, H, W]

    # Move the input to the same device as the model
    video_frames = video_frames.to(device)

    # Run inference on the model
    with torch.no_grad():
        output = model(pixel_values=video_frames)  # Pass inputs into model

    # Extract logits and get the predicted class
    logits = output.logits
    predicted_class_idx = logits.argmax(-1).item()
    predicted_class_label = model.config.id2label[predicted_class_idx]
    return predicted_class_label

Code snippet 3: Function to preprocess video and score with our finetuned model
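To give a feeling for what the temporal subsampling step in the function above does, here is a minimal pure-Python sketch that picks evenly spaced frame indices from a clip. It is an approximation of the idea, not pytorchvideo's implementation, and `uniform_frame_indices` is a hypothetical helper. The numbers are assumptions too: 43 frames corresponds to roughly 1.71 seconds at an assumed 25 frames per second, and 16 is assumed to be the number of frames the model expects.

```python
from typing import List


def uniform_frame_indices(total_frames: int, num_samples: int) -> List[int]:
    """Pick num_samples evenly spaced frame indices from 0 to total_frames - 1."""
    if total_frames < 1 or num_samples < 1:
        raise ValueError("total_frames and num_samples must be positive")
    if num_samples == 1:
        return [0]
    last = total_frames - 1
    # Integer arithmetic avoids rounding surprises; indices may repeat
    # when the clip has fewer frames than num_samples.
    return [(i * last) // (num_samples - 1) for i in range(num_samples)]


# Assumed values: a 43-frame clip reduced to 16 frames.
indices = uniform_frame_indices(total_frames=43, num_samples=16)
print(indices)
```

The first and last frame are always kept, and the frames in between are spread over the whole clip, so the model sees the full movement of a sign rather than only its start.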

Inference

Now that we have the packages loaded and the preprocessing function ready, we can load the separate sign videos (GLOSS_1, GLOSS_2, GLOSS_3 and GLOSS_4), preprocess them and run them through our finetuned model. This results in the predicted glosses.

import requests
import os

# Blob storage URL
blob_path = 'https://bhciaaablob.blob.core.windows.net/handsigntospeech/article sentence/'
video_names = ['GLOSS_1.mp4', 'GLOSS_2.mp4', 'GLOSS_3.mp4', 'GLOSS_4.mp4']

# Create URL list
video_list = [blob_path + video_name for video_name in video_names]

# Local storage path
save_dir = '/dbfs/mnt/handsigntospeech/'

# Ensure directory exists
os.makedirs(save_dir, exist_ok=True)

gloss_list = []
for video_name, video_url in zip(video_names, video_list):
    try:
        # Download video
        response = requests.get(video_url)
        response.raise_for_status()  # Raise error if request fails

        # Save video with unique filename
        local_video_path = os.path.join(save_dir, video_name)
        with open(local_video_path, 'wb') as f:
            f.write(response.content)

        # Process video using model
        predicted_class = predict_video_class(local_video_path, model)
        gloss_list.append(predicted_class)
        print(f"Processed {video_name} -> Predicted class: {predicted_class}")
    except requests.RequestException as e:
        print(f"Failed to download {video_name}: {e}")
    except Exception as e:
        print(f"Error processing {video_name}: {e}")

# Print final results
print("Final Gloss List:", gloss_list)

#> Processed GLOSS_1.mp4 -> Predicted class: where
#> Processed GLOSS_2.mp4 -> Predicted class: vacation
#> Processed GLOSS_3.mp4 -> Predicted class: you
#> Processed GLOSS_4.mp4 -> Predicted class: prefer
#> Final Gloss List: ['where', 'vacation', 'you', 'prefer']

Code snippet 4: Load the sign language videos and process them with our finetuned model
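Each predicted class is simply the label with the highest logit. When a prediction looks doubtful, it can help to inspect the runner-up labels and their probabilities as well. The sketch below shows one way to do that with a plain softmax; `top_k_glosses` is a hypothetical helper and the logits and labels are made-up values, not output from our model.

```python
import math
from typing import Dict, List, Tuple


def top_k_glosses(logits: List[float], id2label: Dict[int, str],
                  k: int = 3) -> List[Tuple[str, float]]:
    """Return the k most probable labels with their softmax probabilities."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(id2label[i], probs[i]) for i in ranked[:k]]


# Made-up logits and labels, purely for illustration.
example_labels = {0: "where", 1: "vacation", 2: "you", 3: "prefer"}
example_logits = [2.0, 0.5, 1.0, -1.0]
for label, prob in top_k_glosses(example_logits, example_labels):
    print(f"{label}: {prob:.2f}")
```

With the real model, the list of logits could be taken from `output.logits` for a single video and the labels from `model.config.id2label`.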

This result already looks pretty understandable, right? ‘where’, ‘vacation’, ‘you’ and ‘prefer’ are the predicted glosses. Maybe you can guess the meaning of this set of glosses, but you will also recognize that it’s not that ‘fluent’ yet and can easily lead to misinterpretation. American English follows a Subject-Verb-Object (SVO) word order, whereas in ASL the word order depends on topic-comment relations. Therefore, we can find multiple word orders, such as Subject-Verb-Object or Subject-Verb, and also Time-Subject-Verb-Object or Time-Subject-Verb. Luckily, such in-depth knowledge of sign language is to a great extent present in most powerful pretrained language models. Therefore, in the next step we use a large language model to transform the list of glosses into a natural-sounding sentence that everyone can understand.

We use the popular langchain package in combination with Ollama (the hub where many large language models are made easily accessible). The template contains the prompt we engineered (prompt engineering is short for trial and error until you end up with a prompt that makes the LLM do what you want it to do) to translate the gloss texts into a fluent sentence representing the meaning of the video. We use the LLM qwen2.5 32b for this task since it performs well on many tasks and is not too big (still 32 billion parameters… but it performed well compared to other models at the time of our project).
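The glosses reach the LLM as one pipe-delimited string that is substituted into the prompt template. The sketch below mimics that substitution with plain string formatting, so you can see the exact text a model would receive. `build_prompt` and the short `TEMPLATE` are simplified stand-ins made up for this example, not the prompt we engineered.

```python
from typing import List

# A deliberately short, hypothetical template with a single {text} placeholder.
TEMPLATE = "Glosses: {text}\nSentence:"


def build_prompt(glosses: List[str], template: str = TEMPLATE) -> str:
    """Join the glosses with ' | ' and fill them into the template."""
    gloss_text = " | ".join(glosses)
    return template.format(text=gloss_text)


prompt = build_prompt(["where", "vacation", "you", "prefer"])
print(prompt)
```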

The last step is to transform the written text into audio. We can use the gTTS package (Google Text-to-Speech) to do this with one line of code. Listen to the result below!

from typing import List
# Explicit module paths: importing these names from the langchain root module is deprecated
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain
from langchain_ollama import OllamaLLM
from gtts import gTTS
import os
import IPython

def gloss_list_to_speech(gloss_text_list: List[str], llm: OllamaLLM, template: str) -> gTTS:
    """
    Converts a sequence of glosses into natural language text and then into speech.

    Args:
        gloss_text_list (List[str]): A list of strings of glosses.
        llm (OllamaLLM): The language model to use for converting glosses to natural language.
        template (str): The prompt template text; it must contain the {text} placeholder.

    Returns:
        gTTS: The generated speech object.
    """
    prompt = PromptTemplate(template=template, input_variables=["text"])
    llm_chain = LLMChain(prompt=prompt, llm=llm)

    # Join the glosses with the pipe delimiter the prompt expects
    gloss_text = ' | '.join(gloss_text_list)
    natural_text = llm_chain.invoke(gloss_text)['text']

    # Convert the sentence to speech and save it as an mp3 named after the glosses
    language = 'en'
    natural_audio = gTTS(text=natural_text, lang=language, slow=False)
    audio_name = gloss_text.replace(' | ','_') + '.mp3'
    natural_audio.save(audio_name)
    IPython.display.display(IPython.display.Audio(audio_name, autoplay=True))
    return natural_audio

llm = OllamaLLM(model="qwen2.5:32b-instruct")

template = """

[INST] <<SYS>>

You are a professional WLASL (Word-Level American Sign Language) sign language translator. You receive a sequence of glosses, each delimited by a pipeline (|) symbol. Each gloss represents a word or phrase that conveys the meaning of a sign in WLASL. Your task is to convert the sequence of glosses into a well-structured, concise sentence that can be easily understood by someone who does not know sign language. Ensure the sentence is grammatically correct and natural in tone. Important: the output must not contain the pipeline (|) delimiter.

Here are some examples:

input: 'You | Name | What | You'

output: 'What is your name?'

input: 'You | Live | Where | You'

output: 'Where do you live?'

Only provide back the requested output, do not introduce your answer! Here is the glosses string, between three backticks: <</SYS>>

```{text}```[/INST]

Your answer:

"""

gloss_list_to_speech(gloss_list, llm, template)

Code snippet 5: Translate gloss labels to natural text and audio
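Even with a strict prompt, an LLM sometimes wraps its answer in quotes or repeats part of the prompt. A small clean-up step before the text goes to the text-to-speech engine can catch the most common cases. The helper below is a hypothetical sketch of such a step and is not part of our notebook; the patterns it removes are assumptions about what might slip through.

```python
def clean_sentence(raw: str) -> str:
    """Remove leftover prompt echoes, delimiters and quotes from an LLM answer."""
    text = raw.strip()
    prefix = "your answer:"
    if text.lower().startswith(prefix):
        text = text[len(prefix):]      # drop an echoed "Your answer:" lead-in
    text = text.replace("|", " ")      # the pipe delimiter must not be spoken
    text = text.strip(" `'\"\n\t")     # drop surrounding quotes and backticks
    return " ".join(text.split())      # collapse repeated whitespace


print(clean_sentence("  'Where do you prefer to go on vacation?'\n"))
print(clean_sentence("Your answer: \"Where | do you live?\""))
```

Note that stripping quotes from both ends is a blunt rule: a sentence that legitimately ends in an apostrophe would lose it.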

Here is the result of our pipeline, that transforms a handsign video into gloss labels, those labels into a sentence everyone can understand and finally into spoken text:

We hope you like how, in our project, we combined a pretrained model and public data to finetune a model, applied it to videos, and used available large language models and text-to-speech technologies to translate sign videos into fluent, spoken text. This can help those who depend on sign language to communicate more easily with the wide audience many of them deserve, not limited by the knowledge their audience has of sign language. This is how technology can help improve lives, and that’s why we love it!